Purpose

This page describes how to install vanilla Kubernetes on Ubuntu virtual machines. The result is a three-node cluster with one control plane node and two worker nodes.

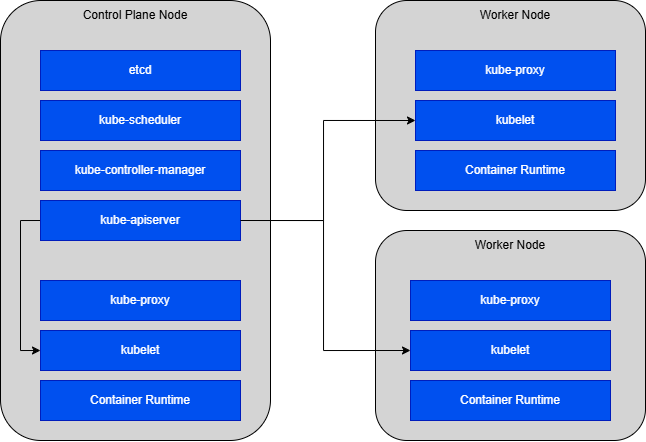

Kubernetes components

First, let’s take a look at each Kubernetes component and its function.

Kubernetes nodes can be divided into control plane nodes and worker nodes.

Each node, whether it runs the control plane or is a worker node, contains a container runtime, the kubelet and kube-proxy for the core operations of running and connecting containers.

The control plane nodes contain functions that enable the overall configuration and operation of the cluster.

The Container Runtime

The container runtime runs the containers on each node. This implementation uses Containerd and Runc.

Runc directly creates and runs containers as per the Open Container Initiative (OCI) Specification.

Containerd manages the container lifecycle on the host system. This includes (but is not limited to) pulling and managing images, coordinating the creation of containers (through runc or a similar runtime) and low-level networking.

In short, containerd is the interface that is used for managing the underlying container process which is executed by runc.

Kubelet

The kubelet is an agent that runs on each node within the cluster. It ensures that the containers specified and managed by Kubernetes are running within pods.

The kubelet interacts with the container runtime to spawn and manage containers through a Container Runtime Interface (CRI) plugin. The CRI enables the kubelet to interact with a wide variety of container runtimes, though this implementation uses containerd and runc specifically.

Kube-proxy

Kube-proxy provides networking functionality for pods within each node, allowing for communication between components both inside and outside of the Kubernetes cluster.

Etcd

Etcd is a highly available key-value store that holds all Kubernetes cluster data, including cluster state, pod and deployment information, node information, Secrets and ConfigMaps.

Kube-controller-manager

The kube-controller-manager is a core component which controls the various aspects of a Kubernetes cluster and ensures the Kubernetes cluster maintains its configured state.

While Etcd stores the desired state of the cluster, the kube-controller-manager ensures the cluster runs at that defined state.

Various controllers are used for managing the state of particular configuration items, such as controllers for Job objects that result in Pod creation and replication controllers that ensure the specified number of pods are running at all times.

Kube-scheduler

The kube-scheduler assigns pods to nodes. It does this by watching for pods that have no node assigned and then finding the best node for each unassigned pod. This is a two-step operation:

- A filtering operation which identifies which nodes the pod can be run on

- A scoring operation which selects the best available node for the pod to run on.
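The two steps can be illustrated with a toy model. This is only a sketch of the filter-then-score idea, not the kube-scheduler's actual algorithm: the node list, the free-CPU figures and the "most free CPU wins" scoring rule are all assumptions made up for the example.

```shell
#!/bin/sh
# Toy filter-then-score selection. Hypothetical data: "<node> <free millicores>".
nodes="node-01 500
node-02 1500"

# pick_node <requested millicores>: reads "<node> <free>" lines on stdin,
# prints the chosen node, or returns non-zero if no node can fit the request.
pick_node() {
  awk -v req="$1" '
    $2 >= req {                        # filter: node has enough free CPU
      if (best == "" || $2 > max) {    # score: prefer the most free CPU
        best = $1; max = $2
      }
    }
    END { if (best != "") print best; else exit 1 }
  '
}

printf '%s\n' "$nodes" | pick_node 250   # prints node-02
```

The real scheduler applies many filters (resources, taints, affinity) and combines several weighted scores, but the shape of the decision is the same: discard nodes that cannot run the pod, then rank the rest.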

Kube-apiserver

Finally, the Kubernetes API server is the central component that ties together all other Kubernetes components. The kube-apiserver provides an API that allows users, internal components and external components to communicate with each other.

Prerequisites

The prerequisites for following along with this page are:

- 3 virtual (or physical) machines, each with:

- Ubuntu 24.x

- 2GB+ memory per machine

- 2 CPUs or more for the control plane VM (x1)

- 1 CPU or more for the worker node VMs (x2)

- Hostnames set on each VM

- Full network connectivity between all VMs

- IPv4 packet forwarding enabled on all nodes, see below.

- Network filtering (iptables) for bridged traffic, see below.

- A number of ports open on each machine type (control plane or worker), see below.

- Ensure swap memory is turned off

Hostnames set on each VM

Setting the hostname is straightforward. Run the following:

# modify the hostnames within /etc/hostname. this implementation uses node-00, node-01 and node-02.

sudo vim /etc/hostname
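As a non-interactive alternative to editing the file, the hostname can be set with `hostnamectl`. The following is a minimal sketch, assuming systemd's `hostnamectl` is available (it is on stock Ubuntu). The `set_node_hostname` helper and the `RUN` override are illustrative names, not part of any tool, and the name check is a simplified version of the lowercase DNS-style naming that Kubernetes expects for node names.

```shell
#!/bin/sh
# set_node_hostname <name>: validate the name, then set it with hostnamectl.
# RUN is a hypothetical override; RUN=echo prints the command instead of running it.
set_node_hostname() {
  name=$1
  # Simplified check: lowercase letters, digits and hyphens, alphanumeric at both ends.
  if ! printf '%s\n' "$name" | grep -Eq '^[a-z0-9]([a-z0-9-]*[a-z0-9])?$'; then
    echo "invalid hostname: $name" >&2
    return 1
  fi
  ${RUN:-sudo} hostnamectl set-hostname "$name"
}

# Dry run for the control plane node:
RUN=echo
set_node_hostname node-00
```

Unsetting `RUN` makes the helper call `sudo hostnamectl set-hostname` for real.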

IPv4 Packet Forwarding

IPv4 packet forwarding is required on all nodes. It allows packets to be routed and forwarded correctly between Kubernetes cluster components.

To enable, run the following on every node:

# persist the setting across reboots

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

EOF

# apply the packet forwarding config without a reboot

sudo sysctl --system

# confirm that the configuration has been applied

sysctl net.ipv4.ip_forward

Network Filtering for Bridged Traffic

This implementation uses Flannel as the overlay network. The overlay network creates a virtual network that sits over the top of the existing node network.

Containers on a node will communicate with each other on this overlay network through layer 2 bridged traffic, and communicate with other nodes through layer 3 IP address based traffic.

To make the layer 2 bridged traffic subject to iptables, we need to enable network filtering for bridged traffic on each node that uses the Flannel overlay network (all of them).

To enable this network filtering for bridged traffic, run the following commands on each node:

# load the br_netfilter kernel module

sudo modprobe br_netfilter

lsmod | grep br_netfilter

# ensure the kernel module loads on boot

echo "br_netfilter" | sudo tee -a /etc/modules

# tell the kernel to filter bridged ipv4 and ipv6 traffic

sudo sysctl -w net.bridge.bridge-nf-call-iptables=1

sudo sysctl -w net.bridge.bridge-nf-call-ip6tables=1

# make the change persistent

echo "net.bridge.bridge-nf-call-iptables=1" | sudo tee -a /etc/sysctl.conf

echo "net.bridge.bridge-nf-call-ip6tables=1" | sudo tee -a /etc/sysctl.conf

sudo sysctl -p

Port Requirements

The TCP ports that need to be open are:

Control plane node:

- 6443 – kube-apiserver

- 2379:2380 – etcd client API (2379) and etcd peer communication (2380)

- 10250 – kubelet api

- 10259 – kube-scheduler

- 10257 – kube-controller-manager

Worker node:

- 10250 – kubelet api

- 10256 – kube-proxy metrics

- 30000:32767 – NodePort services

In this implementation UFW is used to control the Ubuntu host firewall. Also ensure the ports for ssh are open so you don’t lock yourself out of the VM:

# open ports on control plane nodes (allow ssh before enabling the firewall)

sudo ufw allow ssh

sudo ufw allow 6443/tcp

sudo ufw allow 2379:2380/tcp

sudo ufw allow 10250/tcp

sudo ufw allow 10259/tcp

sudo ufw allow 10257/tcp

sudo ufw enable

# open ports on worker nodes (allow ssh before enabling the firewall)

sudo ufw allow ssh

sudo ufw allow 10250/tcp

sudo ufw allow 10256/tcp

sudo ufw allow 30000:32767/tcp

sudo ufw enable

# to check a particular port is working after a service has been started, for example the api server:

nc 127.0.0.1 6443 -v
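The two rule sets above differ only in their port lists, so they can be driven from data. The following is a sketch under that assumption: the `open_ports` helper and the `UFW` override are hypothetical names introduced here, and the port lists are copied from the lists above.

```shell
#!/bin/sh
# Ports from the lists above, keyed by node role.
CONTROL_PORTS="6443 2379:2380 10250 10259 10257"
WORKER_PORTS="10250 10256 30000:32767"

# open_ports <control|worker>: allow ssh plus every TCP port for the role.
# UFW is a hypothetical override; UFW=echo prints the rules instead of applying them.
open_ports() {
  case "$1" in
    control) ports=$CONTROL_PORTS ;;
    worker)  ports=$WORKER_PORTS ;;
    *) echo "usage: open_ports control|worker" >&2; return 1 ;;
  esac
  ${UFW:-sudo ufw} allow ssh
  for p in $ports; do
    ${UFW:-sudo ufw} allow "$p/tcp"
  done
}

# Dry run for a worker node:
UFW=echo
open_ports worker
```

With `UFW` unset the helper calls `sudo ufw` directly. It only adds rules; `sudo ufw enable` still has to be run afterwards.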

Ensure Swap Memory is Turned Off

Kubernetes relies on the kubelet to monitor and manage node resources such as CPU and memory. Having dynamic (swap) memory turned on adds a layer of complexity that interferes with Kubernetes resource management.

To check whether swap is enabled, and to disable it if it is:

# check if it's enabled, if swap is 0B in total it is not enabled

free -h

# or ensure there is no output from the following

sudo swapon --show

# if it is enabled, modify /etc/fstab and remove any swap related lines

sudo vim /etc/fstab

# look for and remove lines such as

# UUID=xxxx-xxxx-xxxx-xxxx none swap sw 0 0
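The fstab edit can also be scripted instead of done by hand. A minimal sketch, assuming GNU sed and the usual whitespace-separated fstab layout; the `comment_out_swap` helper is a hypothetical name, and it comments the swap lines out rather than deleting them so the change is easy to revert.

```shell
#!/bin/sh
# comment_out_swap <fstab-file>: comment out every active swap entry in place.
# Matches uncommented lines whose filesystem type (third field) is "swap".
comment_out_swap() {
  sed -E -i 's/^([^#[:space:]][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]].*)$/# \1/' "$1"
}

# Demonstration on a throwaway file with made-up entries, not the real /etc/fstab.
demo=$(mktemp)
printf '%s\n' \
  'UUID=aaaa / ext4 defaults 0 1' \
  'UUID=bbbb none swap sw 0 0' > "$demo"
comment_out_swap "$demo"
cat "$demo"
```

On a real node the same sed expression would be run as root against `/etc/fstab`, ideally after taking a backup. `sudo swapoff -a` turns swap off immediately, without waiting for the reboot.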

# reboot the machine and check for swap memory again

sudo reboot

free -h

Prerequisites Summary

These prerequisites focused on configuring the underlying host OS and networking for Kubernetes. Once complete, proceed to Step 1.
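Before moving on, the kernel settings and swap state can be checked in one pass. The following is a sketch, not an official preflight tool: `check_prereqs` and its optional root-directory parameter are hypothetical, the parameter exists only so the check can be pointed at a test directory, and the sample values in the demonstration are made up.

```shell
#!/bin/sh
# check_prereqs [root]: verify ip forwarding, bridge filtering and swap state.
# On a real node the files live under /proc, so root defaults to /.
check_prereqs() {
  root=${1:-/}
  rc=0
  for key in net/ipv4/ip_forward \
             net/bridge/bridge-nf-call-iptables \
             net/bridge/bridge-nf-call-ip6tables; do
    value=$(cat "$root/proc/sys/$key" 2>/dev/null)
    if [ "$value" != "1" ]; then
      echo "FAIL $key is '$value', expected 1"
      rc=1
    fi
  done
  # /proc/swaps has one header line; any further line is an active swap device.
  if [ -r "$root/proc/swaps" ] &&
     [ "$(awk 'NR > 1 { n++ } END { print n + 0 }' "$root/proc/swaps")" -gt 0 ]; then
    echo "FAIL swap is enabled"
    rc=1
  fi
  [ "$rc" -eq 0 ] && echo "OK all prerequisites met"
  return "$rc"
}

# Demonstration against a fake root (forwarding on, bridge filtering on, no swap).
fake=$(mktemp -d)
mkdir -p "$fake/proc/sys/net/ipv4" "$fake/proc/sys/net/bridge"
echo 1 > "$fake/proc/sys/net/ipv4/ip_forward"
echo 1 > "$fake/proc/sys/net/bridge/bridge-nf-call-iptables"
echo 1 > "$fake/proc/sys/net/bridge/bridge-nf-call-ip6tables"
echo "Filename Type Size Used Priority" > "$fake/proc/swaps"
check_prereqs "$fake"
```

On a node, calling `check_prereqs` with no argument reads the live values. The bridge entries only exist once the br_netfilter module is loaded, so a missing module shows up as a failure too.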

Step 1 – Install Kubernetes tools and kubectl

Here, the kubelet, kubeadm and kubectl will be installed on each node.

Kubelet: The process that interacts with the container runtime for the management and operation of pods and containers

Kubectl: A command line interface for communicating with the kube-apiserver. This is not required on worker nodes but can be useful for debugging and learning.

Kubeadm: A tool used for bootstrapping Kubernetes clusters to a series of best practices. It is the tool used in this implementation for creating the control plane node and joining two worker nodes.

To install, run the below on all three nodes:

# Add the kubernetes apt sources

sudo apt-get install -y apt-transport-https ca-certificates curl gpg

# If the directory `/etc/apt/keyrings` does not exist, create it before running the curl command:

# sudo mkdir -p -m 755 /etc/apt/keyrings

curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.32/deb/Release.key | sudo gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg

echo 'deb [signed-by=/etc/apt/keyrings/kubernetes-apt-keyring.gpg] https://pkgs.k8s.io/core:/stable:/v1.32/deb/ /' | sudo tee /etc/apt/sources.list.d/kubernetes.list

# Install the components

sudo apt-get update

sudo apt-get install -y kubelet kubeadm kubectl

sudo apt-mark hold kubelet kubeadm kubectl

# Enable and run the kubelet as a service

# enable the kubelet service before running kubeadm

sudo systemctl enable --now kubelet

Step 2 – Install the container runtime

Containerd and runc are used in this implementation for managing and running containers on the host OS.

To install containerd, run the below on each node. Note that we set SystemdCgroup to true so that runc uses the systemd cgroup driver, which lets systemd manage the containers’ cgroups.

# download containerd

wget https://github.com/containerd/containerd/releases/download/v1.7.24/containerd-1.7.24-linux-amd64.tar.gz

wget https://github.com/containerd/containerd/releases/download/v1.7.24/containerd-1.7.24-linux-amd64.tar.gz.sha256sum

# check the sha256sum

sha256sum -c containerd-1.7.24-linux-amd64.tar.gz.sha256sum

# install containerd

sudo tar Cxzvf /usr/local containerd-1.7.24-linux-amd64.tar.gz

# generate the standard containerd configuration

sudo mkdir -p /etc/containerd

# pipe through sudo tee: a plain ">" redirect would run as the unprivileged user and fail

containerd config default | sudo tee /etc/containerd/config.toml > /dev/null

# set SystemdCgroup to true in config.toml

sudo vim /etc/containerd/config.toml

# under: [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]

# set SystemdCgroup = true
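The same change can be made without opening an editor. A minimal sketch, assuming GNU sed and that the generated config contains a `SystemdCgroup = false` line, as the containerd 1.7 default config does; `enable_systemd_cgroup` is a hypothetical helper name and the demonstration runs on a throwaway file.

```shell
#!/bin/sh
# enable_systemd_cgroup <config.toml>: flip SystemdCgroup from false to true in place.
enable_systemd_cgroup() {
  sed -i -E 's/^([[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*)false/\1true/' "$1"
}

# Demonstration on a throwaway file that mimics the relevant part of the default config.
demo=$(mktemp)
cat > "$demo" <<'EOF'
[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]
  SystemdCgroup = false
EOF
enable_systemd_cgroup "$demo"
grep SystemdCgroup "$demo"
```

On a node the sed expression would be run with sudo against `/etc/containerd/config.toml`. If containerd is already running, it needs a restart to pick up the change.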

# enable containerd to run as a systemd service

wget https://raw.githubusercontent.com/containerd/containerd/main/containerd.service

sudo mkdir /usr/local/lib/systemd/system -p

sudo cp containerd.service /usr/local/lib/systemd/system/containerd.service

sudo systemctl daemon-reload

sudo systemctl enable --now containerd

Now run the following to install runc.

# install runc

wget https://github.com/opencontainers/runc/releases/download/v1.2.2/runc.amd64

sudo install -m 755 runc.amd64 /usr/local/sbin/runc

# test container can be run with runc

sudo ctr images pull docker.io/library/alpine:latest

sudo ctr images ls

sudo ctr run --runtime io.containerd.runc.v2 --tty --rm docker.io/library/alpine:latest test-container /bin/sh

Step 3 – Bootstrap the cluster

Generate bootstrapping configuration

Before running kubeadm to bootstrap the cluster, we want to generate and modify the standard configuration to:

- Set the correct control plane node hostname, node-00.

- Set the IP address of the API endpoint. This is the control plane node’s IP address, 10.0.0.10 in this example.

- Specify the pod network subnet (the Flannel overlay network). I’m choosing the 192.168.0.0/16 subnet.

- Set the cluster to use systemd managed containers to match the containerd configuration.

Run the following on the control plane node.

# generate the default kubeadm config

kubeadm config print init-defaults > kubeadm-config.yaml

# modify the kubeadm-config.yaml file

vim kubeadm-config.yaml

# Modify the hostname, API server address, pod network subnet and cgroup driver setting as shown below.

apiVersion: kubeadm.k8s.io/v1beta4

bootstrapTokens:

- groups:

  - system:bootstrappers:kubeadm:default-node-token

  token: abcdef.0123456789abcdef

  ttl: 24h0m0s

  usages:

  - signing

  - authentication

kind: InitConfiguration

localAPIEndpoint:

  advertiseAddress: 10.0.0.10 # control node address

  bindPort: 6443

nodeRegistration:

  criSocket: unix:///var/run/containerd/containerd.sock

  imagePullPolicy: IfNotPresent

  imagePullSerial: true

  name: node-00 # control node hostname

  taints: null

timeouts:

  controlPlaneComponentHealthCheck: 4m0s

  discovery: 5m0s

  etcdAPICall: 2m0s

  kubeletHealthCheck: 4m0s

  kubernetesAPICall: 1m0s

  tlsBootstrap: 5m0s

  upgradeManifests: 5m0s

---

apiServer: {}

apiVersion: kubeadm.k8s.io/v1beta4

caCertificateValidityPeriod: 87600h0m0s

certificateValidityPeriod: 8760h0m0s

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controllerManager: {}

dns: {}

encryptionAlgorithm: RSA-2048

etcd:

  local:

    dataDir: /var/lib/etcd

imageRepository: registry.k8s.io

kind: ClusterConfiguration

kubernetesVersion: 1.31.0

networking:

  dnsDomain: cluster.local

  serviceSubnet: 10.96.0.0/12

  podSubnet: 192.168.0.0/16 # overlay/pod network

proxy: {}

scheduler: {}

---

kind: KubeletConfiguration

apiVersion: kubelet.config.k8s.io/v1beta1

cgroupDriver: systemd

Bootstrap the cluster with kubeadm

Now that we have:

- Completed all prerequisites

- Installed the container runtime

- Installed kubeadm, the kubelet and kubectl

- Generated and modified a kubeadm configuration

It’s time to finally create the cluster. Run the following on the control plane node only.

# initialise cluster

sudo kubeadm init --config kubeadm-config.yaml

If created successfully, you will see the following message:

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.0.0.10:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:1b4da30281a13c5d1a93bf6fce9531bace93e9ccee8201aad375cdf9e41cd13a

Run the commands above to allow you to use kubectl as either a normal user or root user. Don’t run the command to join a worker node just yet.

Common Error

When generating the containerd config in the previous step with containerd config default, the pause container version written to the config may not match the version kubeadm expects from the registry.k8s.io registry.

The pause container is the placeholder container that holds the network namespace for each pod.

When running kubeadm, the preflight checks will point out any mismatch in versions. Change the version to the expected version within the containerd config and then restart containerd.

# change the pause container to the expected version

sudo vim /etc/containerd/config.toml

# sandbox_image = "registry.k8s.io/pause:3.10"

# restart containerd to pick up the change

sudo systemctl restart containerd

Step 4 – Install the Flannel overlay network

Again, Flannel implements an overlay network for pod-to-pod communication.

Generate the Flannel definition with the following commands, and ensure the Network is set to the pod network you set in the kubeadm configuration.

wget https://raw.githubusercontent.com/flannel-io/flannel/master/Documentation/kube-flannel.yml

# modify the net-conf.json section of kube-flannel.yml so that Network matches the pod/overlay network

net-conf.json: |

  {

    "Network": "192.168.0.0/16",

    "Backend": {

      "Type": "vxlan"

    }

  }

Now apply this configuration to the cluster to initiate the Flannel overlay network.

# apply to cluster

kubectl apply -f kube-flannel.yml

You can check that the flannel pods started successfully by running:

kubectl get pods -n kube-flannel

# will show a ready and running kube-flannel-xx-xxxxx container if successful

Now you have a running control plane node with a pod overlay network. We are now ready to add worker nodes to the cluster.
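The pod check can also be reduced to a single number, which is convenient in a script that waits for the overlay network before joining workers. A minimal sketch, assuming the standard `kubectl get pods` column order (NAME, READY, STATUS, ...); `count_not_running` is a hypothetical helper and the sample output below is made up.

```shell
#!/bin/sh
# count_not_running: reads `kubectl get pods --no-headers` output on stdin and
# prints how many pods are in a state other than Running (STATUS is column 3).
count_not_running() {
  awk '$3 != "Running" { n++ } END { print n + 0 }'
}

# Hypothetical sample output; pod names and ages are made up.
sample='kube-flannel-ds-abcde 1/1 Running 0 2m
kube-flannel-ds-fghij 0/1 Init:0/2 0 5s'
printf '%s\n' "$sample" | count_not_running
```

Against a live cluster the input would come from `kubectl get pods -n kube-flannel --no-headers | count_not_running`; a result of 0 means every Flannel pod is running.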

Step 5 – Add nodes to the cluster

Adding a worker node is quick and straightforward assuming you have completed all the prerequisite steps for each worker node VM.

For each node, ensure that:

- All prerequisites from the Prerequisites section have been completed

- Step 1 and Step 2 have been completed, installing kubeadm, the kubelet, optionally kubectl, and the container runtime.

Once confirmed, you can run the node joining instructions from when you bootstrapped the cluster with kubeadm.

# join a node to the cluster (run on each worker node)

sudo kubeadm join 10.0.0.10:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:1b4da30281a13c5d1a93bf6fce9531bace93e9ccee8201aad375cdf9e41cd13a

# repeat for all nodes

Note that if you don’t join the node within 24 hours of creating the control plane node, the initial token for joining the cluster will have expired.

You won’t be able to join a node to the cluster until you generate and use another token. To generate another token, run the following on the control plane node:

# kubeadm token list (will be empty if the token expired)

kubeadm token create

# save the output

# if you need the discovery token CA certificate hash again, run:

openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | \

openssl rsa -pubin -outform der 2>/dev/null | \

openssl dgst -sha256 -hex | \

sed 's/^.* //'
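Both values have a fixed shape, so the join command can be assembled and sanity-checked before it is run on a worker. The following is a sketch: `build_join_cmd` and its argument order are hypothetical, while the checks reflect the bootstrap token format (six characters, a dot, sixteen characters) and the 64 hex characters that the openssl pipeline above prints.

```shell
#!/bin/sh
# build_join_cmd <endpoint> <token> <ca-hash>: validate the pieces and print the
# kubeadm join command. Helper name and argument order are illustrative.
build_join_cmd() {
  endpoint=$1 token=$2 hash=$3
  # Bootstrap tokens have the form [a-z0-9]{6}.[a-z0-9]{16}.
  printf '%s\n' "$token" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$' || {
    echo "malformed token" >&2; return 1; }
  # The openssl pipeline prints the bare hash; kubeadm expects a sha256: prefix.
  printf '%s\n' "$hash" | grep -Eq '^[0-9a-f]{64}$' || {
    echo "malformed CA hash" >&2; return 1; }
  echo "kubeadm join $endpoint --token $token --discovery-token-ca-cert-hash sha256:$hash"
}

# Example with the values used earlier on this page:
build_join_cmd 10.0.0.10:6443 abcdef.0123456789abcdef \
  1b4da30281a13c5d1a93bf6fce9531bace93e9ccee8201aad375cdf9e41cd13a
```

Alternatively, `kubeadm token create --print-join-command` on the control plane node creates a token and prints a complete join command in one step.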

# rerun the following with the new token and discovery token CA certificate hash

sudo kubeadm join 10.0.0.10:6443 --token [the-new-token] \

--discovery-token-ca-cert-hash sha256:[the-discovery-cert-hash]

Repeat this for each node you want to join. You should be able to see each new node with:

kubectl get nodes

You should also be able to see a new flannel pod for each node with:

kubectl get pods -n kube-flannel

Summary

This concludes this guide to bootstrapping a Kubernetes cluster on Ubuntu 24.x VMs.

The steps completed here were:

- A number of prerequisites, including a number of OS host networking configurations

- Installing the container runtime

- Installing the kubeadm, kubelet and kubectl Kubernetes tools and processes

- Bootstrapping the cluster

- Installing a pod overlay network

- Joining additional nodes
